Earpiece Technology: Patent Prosecution:

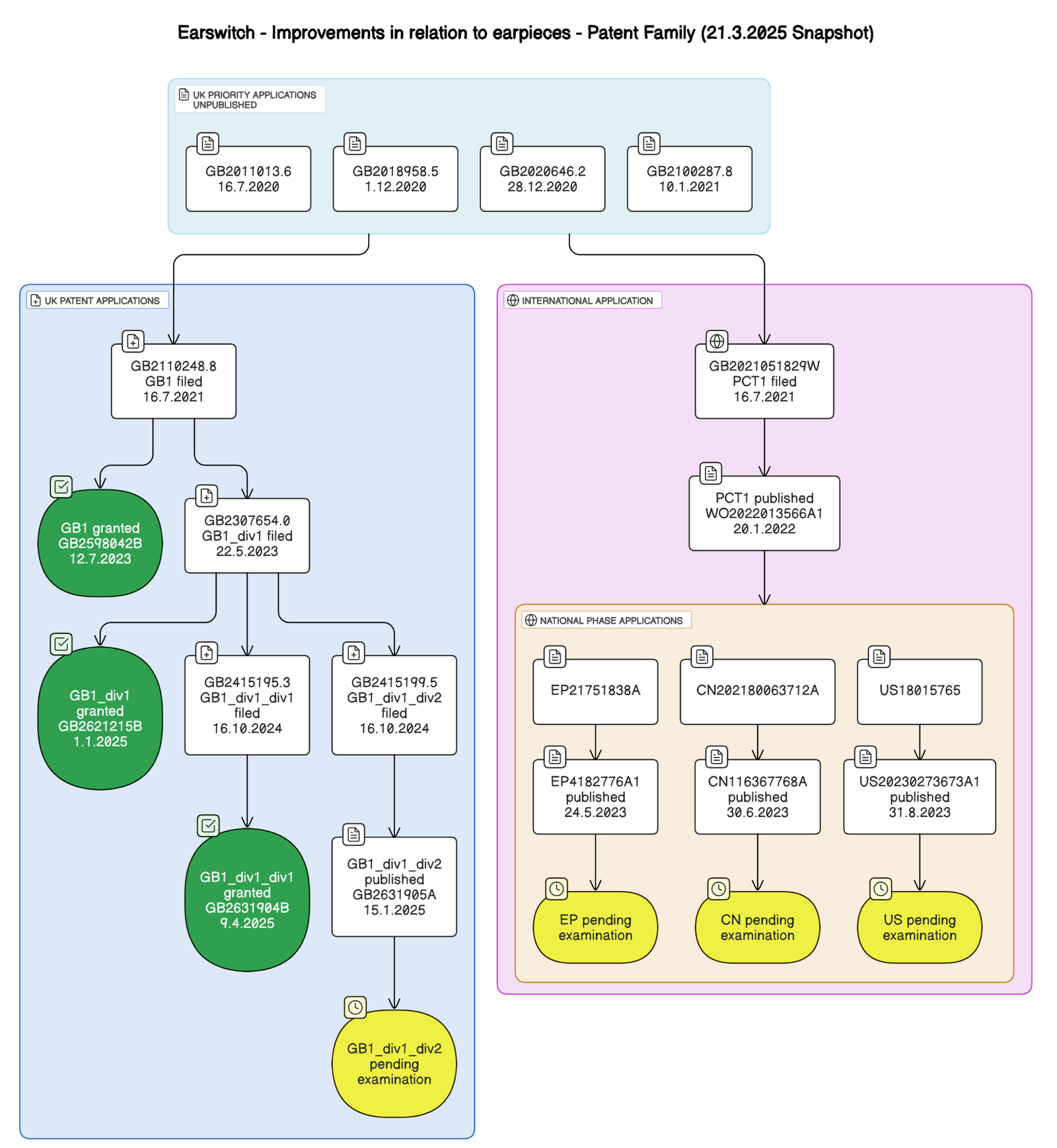

In July 2020, Manchester-based Earswitch Ltd filed UK priority application GB2011013.6 and, between December 2020 and January 2021, three further UK priority applications: GB2018958.5, GB2020646.2, and GB2100287.8 (together, the ‘UK priority applications’).

In July 2021, Earswitch Ltd filed GB1 (GB2110248.8), titled “Improvements in or Relating to Earpieces”, claiming priority from the UK priority applications. In July 2023, GB1 was granted as UK patent GB2598042B, as shown in the patent family tree diagram above.

In May 2023, divisional application GB2307654.0 was filed from GB1; it was granted in January 2025 as GB2621215B (GB1_div1). GB1_div1 in turn served as the basis for two further divisional applications, both filed in October 2024: GB2415195.3, granted in April 2025 as GB2631904B (GB1_div1_div1); and GB2415199.5, published in January 2025 as GB2631905A (GB1_div1_div2) and currently pending examination.

In July 2021, Earswitch Ltd also filed PCT1, an international application (GB2021051829W), at the UKIPO, claiming priority from the UK priority applications. PCT1 was published as WO2022013566A1 in January 2022.

PCT1 led to the following regional and national phase applications, all of which are currently pending examination:

- EP21751838A published as EP4182776A1 on 24.5.2023;

- CN202180063712A published as CN116367768A on 30.6.2023;

- US18/015,765 published as US2023/0273673A1 on 31.8.2023.

This article briefly summarizes the technology disclosed in UK granted patent GB2598042B (GB1) [1], the parent of UK granted patent GB2621215B (GB1_div1) and grandparent of UK granted patent GB2631904B (GB1_div1_div1).

Ear-drums Respond to Eye Movements:

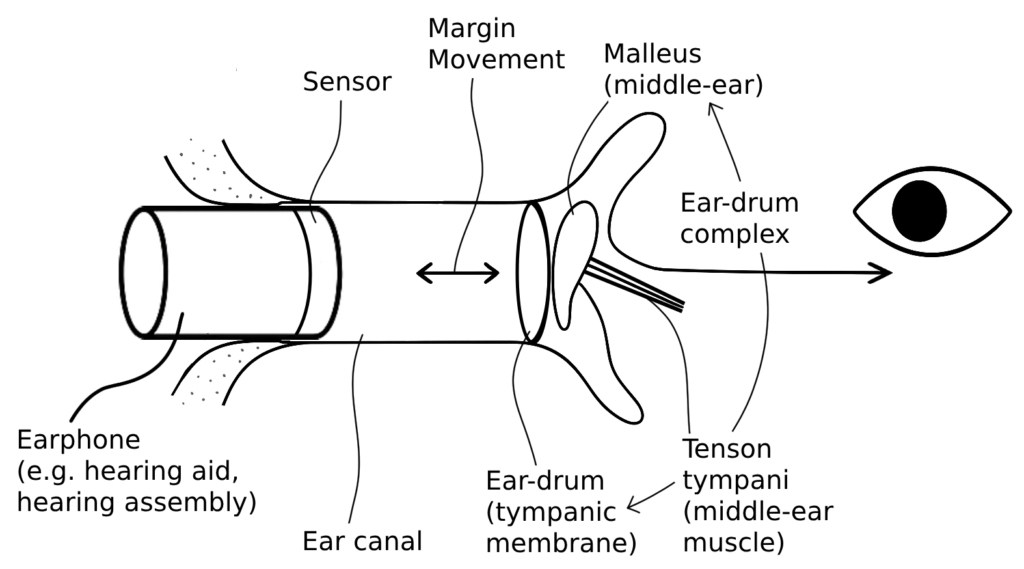

Ear-drums can move in coordination with planned eye movements. Measurements of ear-canal pressure during pursuit gaze show that, around 10 milliseconds before an eye movement begins, pressure decreases in the ear-canal on the side towards which the eyes are preparing to move [1].

This suggests that the ear-drum on that side is drawn inward, a motion caused by contraction of the middle-ear muscle known as the tensor tympani. Meanwhile, on the opposite side, the ear-drum moves outward due to relaxation of the corresponding tensor tympani muscle. Eye movements are therefore correlated with movements of ear structures, and can be detected by monitoring those structures.
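To make this left/right logic concrete, here is a minimal Python sketch, assuming each earpiece reports a baseline-corrected ear-canal pressure change in arbitrary units. The function name `predict_eye_movement_direction` and the `threshold` value are hypothetical illustrations, not taken from the patent: a drop on one side combined with a rise on the other is read as an upcoming movement towards the side with the drop.

```python
from enum import Enum


class Direction(Enum):
    LEFT = "left"
    RIGHT = "right"
    NONE = "none"


def predict_eye_movement_direction(left_delta: float, right_delta: float,
                                   threshold: float = 0.5) -> Direction:
    """Guess the side the eyes are about to move towards (hypothetical sketch).

    left_delta, right_delta: change in ear-canal pressure relative to a resting
    baseline, in arbitrary units. A negative value means pressure has dropped,
    i.e. the ear-drum on that side is assumed to have been drawn inward.
    threshold: hypothetical minimum left/right asymmetry needed to report a direction.
    """
    # Positive asymmetry: the right canal dropped relative to the left.
    asymmetry = left_delta - right_delta
    if asymmetry > threshold:
        return Direction.RIGHT
    if asymmetry < -threshold:
        return Direction.LEFT
    return Direction.NONE


print(predict_eye_movement_direction(0.4, -0.6))  # Direction.RIGHT
```

Comparing the two ears, rather than reading one ear alone, follows the opposite-sided behaviour described above; a real device would presumably need per-user calibration of the threshold.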

Tensor Tympani and Direction Detection:

The tensor tympani is a muscle attached to the malleus, a middle-ear bone that connects to the inner surface of the ear-drum. In the patent, the ear-drum and malleus together are referred to as the “ear-drum complex”. Subtle movements of the ear-drum caused by tensor tympani contraction can reveal the direction of eye movements. They may also indicate the direction of auditory attention when a person listens to sounds from one side without moving their eyes.

Detecting these movements, and thereby tracking either eye direction or auditory focus, can allow purposeful control of a graphical, augmented reality, or virtual reality interface. In essence, eye movements detected by sensors positioned in the ear, for example in earphones, can be used to control external devices.

Earpiece-Based Interface Control:

The invention provides both an apparatus and a method for controlling interfaces and devices through earphones, enabling hands-free and silent operation. This is possible because control is based on detecting directional movements linked to eye position or auditory focus, together with voluntary movements of the middle-ear muscles, allowing dynamic and adjustable interface control.

The Invention:

The invention uses sensors embedded in earphones to detect ear-structure movements associated with two-dimensional eye movements and depth of visual focus, as well as with the direction of auditory attention. Unlike traditional systems, these sensors are described as unaffected by talking, chewing, facial movements, or head motion, which may open up a wide range of applications.

The system may achieve accurate horizontal and vertical eye tracking using in-ear sensors in earpieces, without requiring eye imagers. By detecting eye convergence through earphone sensors, the invention enables three-dimensional control of devices, allowing user interactions based on both eye direction and depth of focus.

Movements of the ear-drum complex and ear-drum margin are directly linked to specific eye activities. During convergent eye movements, such as when a user focuses on a nearby object while reading, a section of the ear-drum margin and structures near the malleus move in coordination.

By contrast, horizontal and vertical eye movements cause different, independent movements of the malleus area and ear-drum margin. These differences produce distinguishable movement patterns that can be detected, for example, to represent different inputs or commands.

These distinct movement patterns enable the system to detect activities such as vertical gaze, convergence, return to central gaze, voluntary movements, and shifts in auditory attention.
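As an illustration only, the distinction described above (coordinated versus independent movement of the malleus area and the ear-drum margin) could be sketched as a correlation test over a short window of samples from one ear. The function names, the `motion_floor` and `coord_threshold` values, and the choice of Pearson correlation are all assumptions for this sketch; the patent summary does not specify how the patterns are separated.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def rms(x: Sequence[float]) -> float:
    """Root-mean-square amplitude of a baseline-corrected signal."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def classify_window(malleus: Sequence[float], margin: Sequence[float],
                    motion_floor: float = 0.1,
                    coord_threshold: float = 0.8) -> str:
    """Label one window of displacement samples from a single ear (hypothetical).

    malleus: displacement of the area near the malleus.
    margin: displacement of a section of the ear-drum margin.
    """
    if len(malleus) != len(margin) or len(malleus) < 2:
        raise ValueError("need two equal-length windows of at least 2 samples")
    # Both regions barely moving: treat as rest.
    if max(rms(malleus), rms(margin)) < motion_floor:
        return "rest"
    # Assumption: "moving in coordination" is read as strong correlation,
    # in phase or in anti-phase, between the two regions.
    if abs(pearson(malleus, margin)) >= coord_threshold:
        return "convergence"
    # Otherwise the two regions are moving independently.
    return "horizontal_or_vertical"
```

A window labelled `horizontal_or_vertical` would still need further analysis, for example comparing data from the two ears, to tell horizontal from vertical gaze.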

By analyzing data from both the ear-drum complex and the ear-drum margin, the invention provides more accurate tracking of eye movements and auditory focus. It can determine both central and off-axis eye positions, and differences between the data from each ear help locate the position and depth of the user’s visual focus.

The processor and algorithm of the invention may enhance overall accuracy by performing three-dimensional analysis of eye movements, auditory attention direction, and visual focus location, while remaining resilient against disruption from facial movements, speech, or swallowing.

GB2598042B (GB1):

GB1 claims an apparatus for detecting ear structure movements or changes in order to control or affect the function of an associated device. The apparatus comprises:

- sensor means capable of being worn by a user to be located in or adjacent an ear-canal so as to fix a position of the sensor relative to a position of an ear-drum of the user, the sensor means being configured to detect and capture sensor data relating to middle-ear, ear-drum complex, ear-drum margin, and/or other ear structure movements or changes of characteristics; and

- means for processing and analysing sensor data from the sensor means;

wherein the means for processing and analysing sensor data from the sensor means includes one or more of the following:

- eye movement or directional auditory attention or focus data, by analysing data relating to a degree, speed, size of movement or change, or duration of movement of the middle-ear, ear-drum complex, ear-drum margin, and/or associated ear structures;

- voluntary control data, by analysing data relating to a degree, speed, size of movement or change, or duration of movement of the middle-ear, ear-drum complex, ear-drum margin, and/or associated ear structures;

such that, through identification of changes or differences in the sensor data, the apparatus is capable of controlling or affecting the function of the associated device.
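The voluntary control branch of the claim, which analyses the degree and duration of movement, can be illustrated with a simple threshold-and-duration detector. In this hypothetical sketch, `signal` is a baseline-corrected displacement trace from the sensor means, and any run of samples whose magnitude stays at or above `threshold` for at least `min_samples` is reported as a candidate voluntary event (for instance, a deliberate tensor tympani contraction used as a switch press). The function name and parameter values are assumptions, not taken from the patent.

```python
from typing import List, Optional, Sequence, Tuple


def detect_voluntary_events(signal: Sequence[float], threshold: float,
                            min_samples: int) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, for each run where
    |signal| >= threshold lasts at least min_samples samples."""
    events: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, value in enumerate(signal):
        if abs(value) >= threshold:
            if start is None:
                start = i  # a candidate movement begins
        elif start is not None:
            # Movement ended: keep it only if it lasted long enough.
            if i - start >= min_samples:
                events.append((start, i))
            start = None
    # Close a run that is still active at the end of the trace.
    if start is not None and len(signal) - start >= min_samples:
        events.append((start, len(signal)))
    return events


trace = [0, 0.2, 1.0, 1.2, 1.1, 0.1, 0.9, 0.0, 1.5, 1.6, 1.4, 1.3]
print(detect_voluntary_events(trace, threshold=0.8, min_samples=3))
# [(2, 5), (8, 12)]
```

Requiring a minimum duration is one simple way to reject brief, involuntary twitches such as the single sample at index 6; a practical system would presumably tune both values per user.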

The invention enables three-dimensional and multi-functional control of physical or virtual devices, whether nearby or remote, by detecting movements in the ear-canal structures like the ear-drum.

Data from these movements is processed to adjust interfaces based on the user’s intended visual or auditory focus, such as attention shifts or eye movements. It may also capture health-related data, such as signs of otosclerosis, glue ear, ear-drum perforations, or abnormal tremors, aiding in disease monitoring.

The patent further discloses embodiments with optical lenses that either adjust focus dynamically or simply switch between near and far vision in a bifocal setup. The change is triggered when convergence of the eyes is detected through in-ear muscle movement, which acts much like a switch signal.
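The bifocal embodiment behaves like a two-state switch. A common way to keep such a switch from flickering when its input hovers near a single threshold is hysteresis, sketched below in Python. The class name, the `convergence_index` (assumed here to be a 0 to 1 estimate derived from the in-ear sensor data), and the `near_on`/`near_off` thresholds are hypothetical; the patent summary does not state how the switching decision is made.

```python
class BifocalSwitch:
    """Two-state near/far lens selector with hysteresis (hypothetical sketch)."""

    def __init__(self, near_on: float = 0.7, near_off: float = 0.4):
        if near_off >= near_on:
            raise ValueError("near_off must be below near_on")
        self.near_on = near_on    # convergence level that switches to near vision
        self.near_off = near_off  # lower level that switches back to far vision
        self.mode = "far"

    def update(self, convergence_index: float) -> str:
        # Between the two thresholds the current mode is kept, which
        # prevents rapid toggling on a noisy convergence estimate.
        if self.mode == "far" and convergence_index >= self.near_on:
            self.mode = "near"
        elif self.mode == "near" and convergence_index <= self.near_off:
            self.mode = "far"
        return self.mode


switch = BifocalSwitch()
print([switch.update(v) for v in [0.2, 0.75, 0.5, 0.45, 0.3, 0.6]])
# ['far', 'near', 'near', 'near', 'far', 'far']
```

The gap between the two thresholds is the design choice here: values of 0.5 and 0.45 leave the lens in near mode, whereas a single threshold would have switched back immediately.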

Optional features include that the sensor means may be configured to detect changes in one or more of the following:

- movement of the ear-drum complex, or associated ear structures, through two or three dimensional analysis or image processing;

- distance from the sensor means;

- direction, size, degree, or duration of movement of the auditory canal, ear-drum margin, adjacent wall of the auditory tube (ear-canal) and/or associated or other ear structures;

- colour of at least part of the ear-drum complex or related ear structures;

- frequency of vibration of at least part of the ear-drum complex;

- reflection;

- any other measure indicating movement of the ear-drum complex and/or associated structures;

- and/or any one or more of the above providing a discernible difference between different areas of the same ear structure, between different ear structures, or between a pair of sensors, each associated with a different ear of the user.

Further, the sensor means may include an image detector; thermal imaging detector; static or scanning laser detector; time of flight detector; LIDAR (laser imaging, detection, and ranging); laser triangulation; laser Doppler vibrometry; digital holography; optical coherence tomography; photoplethysmography (PPG) sensor, with or without oxygen saturation sensor; and/or ultrasonic detector.

Controlling or affecting the function of the associated device may include:

- operating as an intentional switch trigger;

- graduated or variable intentional control;

- two or more dimensional or variable control of user interfaces;

- controlling direction, amplitude and frequency of sound output of an earphone device;

- optical focus control;

- multi-functional control of the same interface or different interfaces;

- change in state of a device;

- control of a digital, electronic or other device, or electronic interface, including those remote from the user;

- virtual or real movement control; and/or

- monitoring of ear-drum complex, ear-drum margin or other ear structure movements as a biometric measure of health, disease, physiological and behavioural activity.

The invention includes options such as using sensor data about eye movement, attention, or focus to control interfaces by detecting movements of the middle-ear. It may also include a second sensor worn in or near the other ear canal to gather more data.

By comparing data from both ears, the system can control or influence the connected device. The apparatus can also provide feedback to the user. Additionally, it may detect eye convergence, gaze focussing, depth of focus, and horizontal or vertical eye movements, either alone or in combination.

Method for Detecting Changes in Ear Structures:

The claimed method involves using a sensor positioned fully or partly in, or next to, the ear-canal to detect changes in ear structures like the ear-drum complex and ear-drum margin.

The sensor can be housed in devices worn like hearing aids, hearing assistance devices, or earphones. It monitors properties such as distance, position, movement, shape, or colour of the ear-canal structures. In some cases, the earphone device also includes a voluntary ear-drum control switch function.

The method for detecting movements or changes of ear structures (middle ear, ear-drum complex, ear-drum margin, or others) to control or affect an associated device comprises:

- capturing sensor data from a sensor located in or adjacent the ear canal, fixed relative to the ear-drum;

- processing and analysing sensor data for eye movement, directional auditory attention, or voluntary control based on degree, speed, size, or duration of movement; and

- using identified changes or differences in the sensor data to control or affect device function.

The method may further include detecting changes in movement of ear structures (via 2D or 3D analysis or image processing); distance from the sensor; direction, speed, size, or duration of movement, position, or shape; colour or vibration frequency of ear structures; reflection characteristics; differences between different areas or structures, or between sensors associated with different ears.

The method may include controlling or affecting device function by triggering intentional switches; providing graduated or variable control; providing two- or multi-dimensional control of interfaces; controlling sound output parameters (direction, amplitude, frequency); controlling optical focus; providing multi-functional control of interfaces; changing device state; controlling digital, electronic, or remote devices; controlling virtual or real movement; and monitoring ear structure movements as biometric indicators of health, disease, physiological, or behavioural activity.

The method may further comprise processing sensor data concerning eye movement or auditory attention to provide graduated, dynamic, or variable control based on ear structure movements; detecting voluntary ear structure movements for intentional control; receiving and comparing sensor data from both ears to control or affect device function. The method may be used to detect eye convergence, gaze focussing, depth of intended visual focus, and horizontal and/or vertical eye movements, or any combination thereof.
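Graduated or variable control could, as one possibility, map the size of a detected ear-structure movement onto a continuous control level. The sketch below assumes a baseline-corrected displacement in arbitrary units and uses a hypothetical `dead_zone` (so resting noise produces no output) and `full_scale` (the displacement treated as maximum control); neither value, nor the function name, comes from the patent.

```python
def graduated_control(displacement: float, dead_zone: float = 0.1,
                      full_scale: float = 1.0) -> float:
    """Map an ear-structure displacement to a control level in [0, 1] (hypothetical)."""
    if not 0 <= dead_zone < full_scale:
        raise ValueError("require 0 <= dead_zone < full_scale")
    magnitude = abs(displacement)  # direction is ignored; only size matters here
    if magnitude <= dead_zone:
        return 0.0  # small movements inside the dead zone give no output
    # Scale linearly from the edge of the dead zone up to full scale.
    level = (magnitude - dead_zone) / (full_scale - dead_zone)
    return min(level, 1.0)  # saturate beyond full scale
```

The resulting level could drive, say, a volume or scroll rate, with the direction of movement handled separately.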

Conclusion:

The invention may integrate eye-tracking with dynamic control of associated devices, addressing vergence-accommodation conflict by adjusting display focus based on eye convergence. The invention may utilize sensors embedded in earpieces to track both eye movement and auditory attention in two dimensions, enabling intuitive, hands-free control of virtual or physical interfaces.

By analyzing the movements of the ear-drum complex, the system aims to detect eye convergence, direction, and depth of focus accurately, allowing precise three-dimensional control of devices. This approach may offer a more natural and responsive way to interact with digital and physical environments, free from limitations of traditional tracking systems such as the need for eye imagers and susceptibility to talking, chewing, facial movements, or head motion.

Recent News:

EarSwitch® is a startup developed from research at the University of Bath. It showcased its new EarControl technology at CES, as reported in an announcement on the University’s website on 9 January 2024 [2].

According to the report, the wearable in-ear device EarControl™ may help people with disabilities, such as motor neurone disease (MND), to communicate by detecting muscle movements in the ear to control assistive devices such as a keyboard. The technology works by tracking movement of the tensor tympani muscle, which can be controlled even by people with degenerative conditions, potentially extending their period of independence.

Founded by Dr. Nick Gompertz, EarSwitch has received over £1.5 million in funding from the National Institute for Health and Care Research to develop the product into a medical device, indicating the promise of this technology in the medical devices sector. With support from various partners, EarSwitch also aims to improve the quality of life of people with neurological conditions.

EarSwitch’s innovative in-ear technology may offer a groundbreaking way to control assistive devices through subtle ear muscle movements, with potential benefits not only for individuals with neurological conditions but across a wide range of other applications.

References:

[1] UK Granted Patent, GB2598042B – https://worldwide.espacenet.com/patent/search/family/077249839/publication/GB2598042B?q=GB2598042B

[2] EarSwitch® in-ear communication device showcased at CES – https://www.bath.ac.uk/announcements/earswitch-in-ear-communication-device-showcased-at-ces/

The Patent Eye blog offers a glimpse into the future by showcasing newly granted patents. Join us as we explore the latest innovations shaping our world.

The Patent Eye blog is made available by Alphabet Intellectual Property for informational purposes, in particular, sharing of newly patented technology from granted and published patents available in the public domain. It is not meant to convey legal position on behalf of any client, nor is it intended to convey specific legal advice.
